Our everyday tasks are increasingly digital, supported by tools and services that are based on some remote server farm. How do we assess the carbon footprint left by data centers?

It's hard to function in modern life without the 'cloud'. The cloud, after all, is just someone else's computer (or server).

Now, it's certainly true that the cloud can help shrink carbon footprints, allowing people to accomplish a lot without burning fuel to get anywhere, whether by working from home or by navigating around traffic jams. At the same time, it's easy to forget that the cloud has its own carbon footprint, left by data centers buzzing with digital activity.

"At the end of the day, the internet is running on data centers, and from an operational perspective, the data centers are running on energy," Maud Texier, Google's head of clean energy and carbon development, tells ZDNET. "So, this is the primary source of greenhouse gas emissions -- when someone is using the cloud, is typing an email and creating something new."


Before attempting to determine how green the cloud is, it's worth revisiting what exactly the 'cloud' is. This somewhat cryptic tech term simply refers to computing services delivered over the internet. That definition covers everything from applications like Instagram or Google Search to foundational computing services like processing power and data storage. Companies can decide to manage their digital operations on their own servers (typically in an on-premises data center) or via a cloud provider like Google Cloud, Amazon Web Services or Microsoft Azure.

More data doesn't equal more energy consumption

Given the way the digital economy has exploded over the past two decades, it'd be easy to assume that the cloud's carbon footprint has also spiked. Luckily, that's not the case.

Research published in 2020 found that the computing output of data centers increased 550% between 2010 and 2018. However, energy consumption from those data centers grew just 6%. As of 2018, data centers consumed about 1% of the world's electricity output.
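Taken together, those two growth figures imply a steep drop in the energy needed per unit of computing. The minimal sketch below does that arithmetic, assuming both percentages describe growth relative to the 2010 baseline; the function name `energy_intensity_change` is hypothetical, chosen only for illustration.

```python
def energy_intensity_change(compute_growth_pct: float, energy_growth_pct: float) -> float:
    """Return end-of-period energy per unit of compute divided by the starting value,
    given percentage growth in compute output and in energy use (hypothetical helper)."""
    compute_ratio = 1 + compute_growth_pct / 100  # 550% growth -> 6.5x the baseline
    energy_ratio = 1 + energy_growth_pct / 100    # 6% growth -> 1.06x the baseline
    return energy_ratio / compute_ratio


# Figures quoted above for 2010-2018 (assumed to be relative to 2010)
ratio = energy_intensity_change(550, 6)
reduction_pct = (1 - ratio) * 100
print(f"Energy per unit of compute fell by about {reduction_pct:.0f}%")
# prints: Energy per unit of compute fell by about 84%
```

In other words, under that reading each unit of computing in 2018 needed roughly one-sixth of the energy it did in 2010.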

The tech industry has kept its energy consumption in check by making huge gains in energy efficiency, along with a range of other strategic moves.

Cloud vs on-premises data centers

Cloud migration has been huge -- the share of corporate data in the cloud jumped from 30% in 2015 to 60% in 2022.

But organizations mostly aren't moving to the cloud to make their operations more sustainable, notes Miguel Angel Borrega, research director for Gartner's infrastructure cloud strategies team.

"There are other variables that are even more important than sustainability," he tells ZDNET -- such as cost savings or the ability to leverage the latest technologies from cutting-edge innovators like Google and Microsoft. That said, sustainability ends up as a clear benefit as well.

"When we compare gas emissions, energy efficiency, water efficiency, and the way they efficiently use IT infrastructure, we realize that it's better to go to the cloud," Borrega says.

One major reason cloud providers can run more efficiently, he says, is simply that their infrastructure is newer. Many existing corporate data centers are 30 or 40 years old, so they don't benefit from more recent gains in energy efficiency.

Renewable energy

One of the main ways to reduce greenhouse gas emissions is to use renewable energy. Traditional data centers are normally powered with energy from fossil fuel sources, but new cloud regions are increasingly tapping renewables.

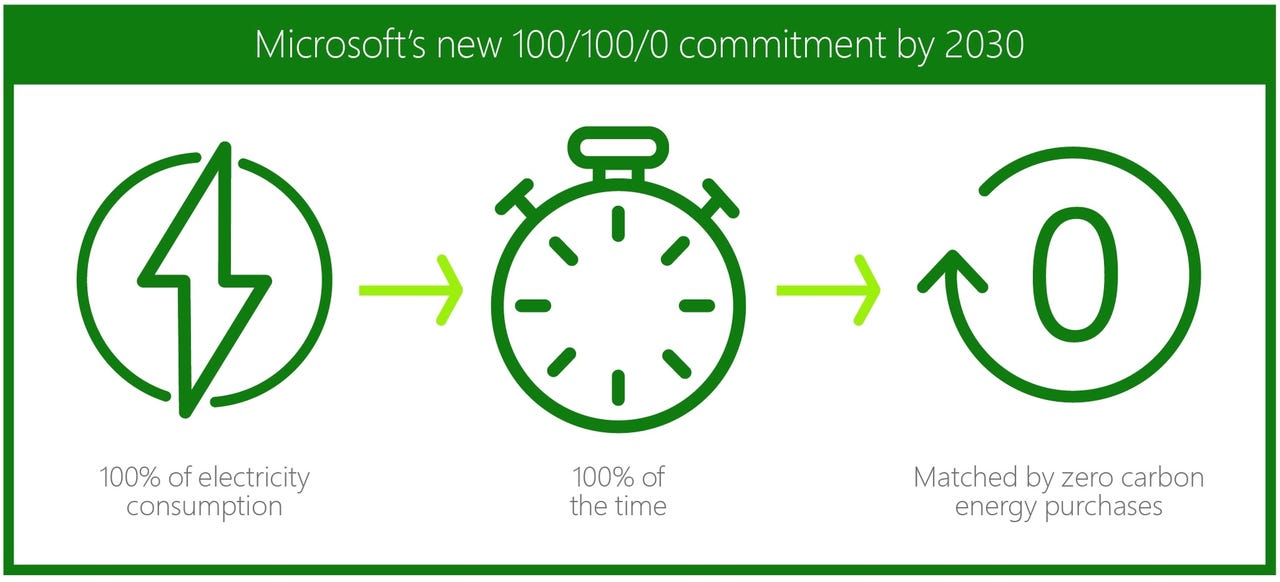

In cases where they can't use renewables, cloud companies are now often committed to compensating for their energy use with zero-carbon energy purchases, or carbon credits -- effectively investing in future carbon-free uses. For example, Microsoft has pledged to have 100% of its electricity consumption matched by zero-carbon energy purchases by 2030.

"Like other users, our datacenters and our offices around the world simply plug into the local grid, consuming energy from a vast pool of electrons generated from near and far, from a wide variety of sources," Microsoft executives wrote when announcing the pledge. "So while we can't control how our energy is made, we can influence the way that we purchase our energy."

Amazon, meanwhile, says it's on track to power all of its operations with 100% renewable energy by 2025. That includes Amazon's operations facilities, corporate offices and physical stores, as well as Amazon Web Services (AWS) data centers. The company says it's committed to reaching net-zero carbon across its operations by 2040.

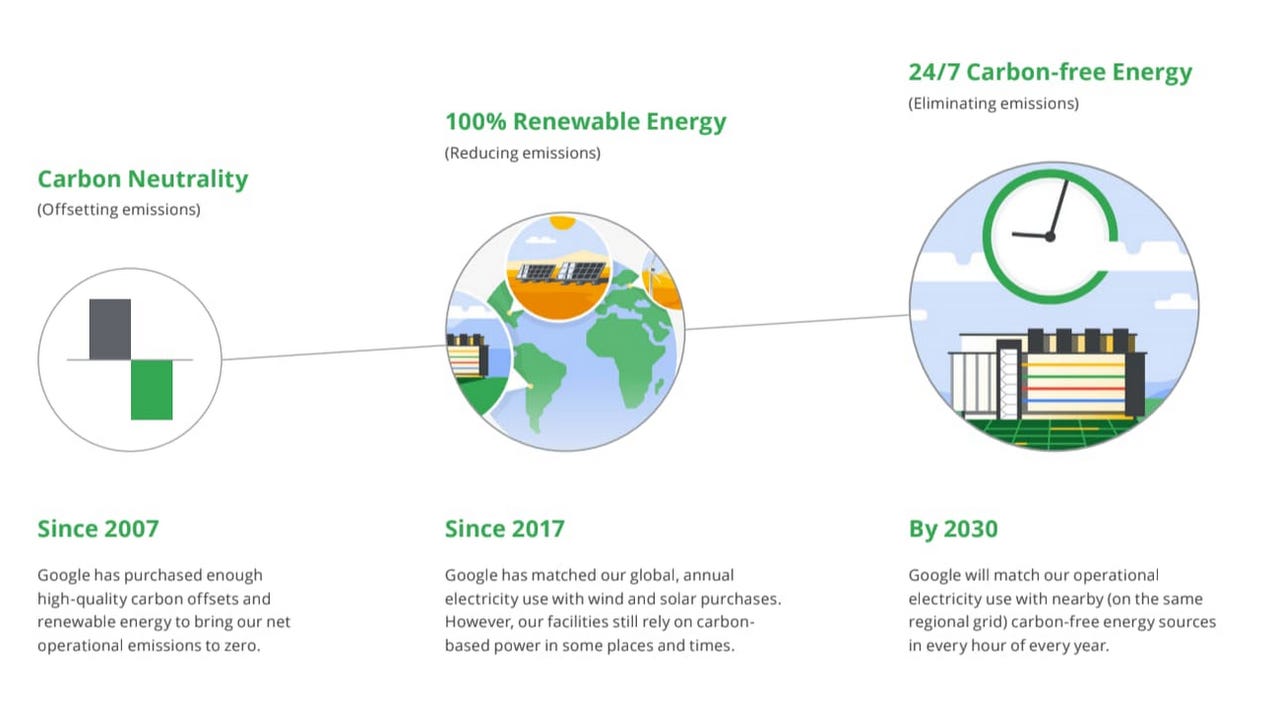

Google started its cloud sustainability efforts in 2007 by purchasing high-quality carbon credits. In 2010, it began finding clean energy sources and adding clean energy to the grid to compensate for its consumption. And since 2017, the company has been buying enough renewable energy to match its consumption.

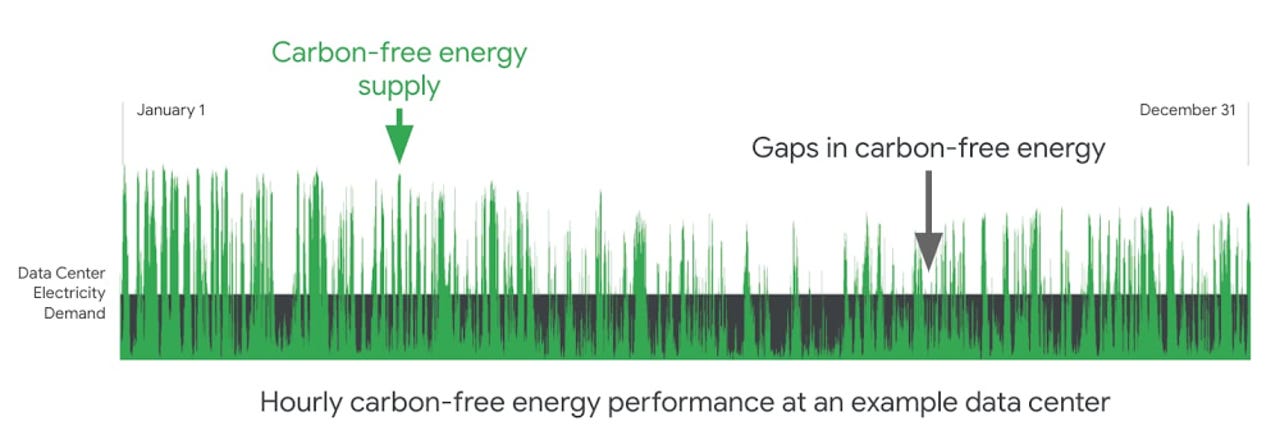

In 2020, Google began tracking a new metric, the carbon-free energy percentage (CFE%). This metric represents the average percentage of carbon-free energy consumed in a particular location on an hourly basis, while taking into account the carbon-free energy that Google has added to the grid in that particular location. So for businesses, the CFE% represents the average percentage of time their applications will be running on carbon-free energy.
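Hourly accounting matters because carbon-free supply and demand don't always line up. The minimal sketch below uses a simplified model, not Google's published methodology: for each hour we assume we know the site's consumption and the carbon-free energy available to it (grid carbon-free generation plus any carbon-free energy the operator has added), carbon-free energy counts only against consumption in the same hour, and CFE% is matched energy divided by total consumption. The names `HourlySample`, `hourly_cfe_percent` and `annual_match_percent` are hypothetical, and the sample day is made up.

```python
from dataclasses import dataclass


@dataclass
class HourlySample:
    """Energy figures for one hour at one data center location (hypothetical model)."""
    load_mwh: float         # electricity the data center consumed in this hour
    carbon_free_mwh: float  # carbon-free energy available to it in this hour (assumption)


def hourly_cfe_percent(samples: list[HourlySample]) -> float:
    """Share of consumption matched by carbon-free energy in the same hour.

    A surplus of carbon-free energy in one hour cannot cover a shortfall in another.
    """
    total_load = sum(s.load_mwh for s in samples)
    if total_load <= 0:
        raise ValueError("total load must be positive")
    matched = sum(min(s.load_mwh, s.carbon_free_mwh) for s in samples)
    return 100 * matched / total_load


def annual_match_percent(samples: list[HourlySample]) -> float:
    """Coarser volumetric matching: total carbon-free energy vs total load, capped at 100%."""
    total_load = sum(s.load_mwh for s in samples)
    if total_load <= 0:
        raise ValueError("total load must be positive")
    total_cfe = sum(s.carbon_free_mwh for s in samples)
    return min(100.0, 100 * total_cfe / total_load)


# Made-up day with a solar-like profile: surplus in two hours, nothing in the other two
day = [HourlySample(10, 20), HourlySample(10, 20), HourlySample(10, 0), HourlySample(10, 0)]
print(annual_match_percent(day))  # 100.0
print(hourly_cfe_percent(day))    # 50.0
```

Both numbers describe the same day, but only the hourly figure shows that half of the consumption was not actually covered by carbon-free energy, which is why an hour-by-hour goal is much harder to meet than an annual one.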

Google also set a goal in 2020 to match its energy consumption with carbon-free energy (CFE) every hour, in every region, by 2030. Texier says that as of last year, about two-thirds of Google's energy consumption relied on CFE.

"There's still more work to do," she says. "It's going to be much more regional -- how do we talk with regional stakeholders and utilities as they try to change the grids?"

Where is your cloud running?

As Texier suggests, location is an important factor for anyone trying to assess just how 'green' a specific cloud is. Some of Google's data centers, in places such as Finland, Toronto and Iowa, have a CFE% above 90%. Others, such as data centers in Singapore, Jakarta and South Carolina, are closer to 10% or 20%.

"This is one of the biggest realizations that came to us when we switched from this global annual goal to this small, more specific, 24/7 goal," she says. "That actually, there's a very large variability within the portfolio. And we have to be much more surgical in terms of the roadmap for each data center."
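For a business, one practical takeaway is to factor CFE% into where workloads run. The sketch below assumes you already have a CFE% figure for each candidate region and a set of regions that meet your other requirements (latency, data residency, price); the region names and percentages are placeholders, not real data, and `greenest_region` is a hypothetical helper.

```python
from collections.abc import Mapping


def greenest_region(cfe_by_region: Mapping[str, float], allowed: set[str]) -> str:
    """Return the allowed region with the highest CFE%.

    cfe_by_region maps region name -> CFE% (0-100); allowed holds the regions
    that already satisfy other constraints such as latency or data residency.
    """
    candidates = {region: cfe for region, cfe in cfe_by_region.items() if region in allowed}
    if not candidates:
        raise ValueError("no allowed region has a CFE% figure")
    return max(candidates, key=candidates.__getitem__)


# Placeholder figures for illustration only
cfe = {"region-a": 92.0, "region-b": 15.0, "region-c": 61.0}
print(greenest_region(cfe, allowed={"region-b", "region-c"}))  # region-c
```

CFE% is only one input here; a region with a lower score may still be the right choice if it is the only one that meets latency or regulatory needs.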

In regions such as Asia Pacific, Texier says, the barriers to greater renewables adoption are often geographical -- there's just not a lot of space to generate renewable energy. Instead, energy providers have to build "islanded grids" that provide energy from sources such as offshore wind, which is more expensive and built on newer technologies.

Meanwhile, in places like the US South, Texier says there are fewer options for energy customers like Google to purchase green energy.

"Big picture right now, there's a lot of demand for renewable energy, not just from Google, but from a lot of corporations," she says.

"It's really been a booming market, which on one side is really helpful to accelerate the deployment of more renewable energy. On the other side, what we are realizing now is that the needs of deployment of clean energy and renewable energy cannot be met with the current processes that we have."

Getting more efficient

While cloud providers work with the energy sector and regulators to create more renewable energy options, they're also getting more efficient at running their operations. A data center requires a great deal of power to run workloads, maintain data storage, run cooling systems, distribute energy, and so forth. With advances in areas such as refrigeration and cooling systems, cloud providers can devote a larger share of that power to computing itself.
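A common way to quantify this is power usage effectiveness (PUE), an industry metric the article doesn't mention: total facility energy divided by the energy delivered to IT equipment, so a PUE of 1.0 would mean every kilowatt-hour goes to computing. The sketch below uses made-up facility numbers to show how trimming cooling and power-distribution overhead raises the share of energy that reaches the servers; the function names are hypothetical.

```python
def pue(total_facility_mwh: float, it_equipment_mwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy (>= 1.0)."""
    if it_equipment_mwh <= 0 or total_facility_mwh < it_equipment_mwh:
        raise ValueError("need 0 < IT energy <= total facility energy")
    return total_facility_mwh / it_equipment_mwh


def it_share(total_facility_mwh: float, it_equipment_mwh: float) -> float:
    """Fraction of the facility's energy that reaches the computing equipment (1 / PUE)."""
    return 1 / pue(total_facility_mwh, it_equipment_mwh)


# Made-up figures: the same 1,000 MWh of IT load with two levels of overhead
# (cooling, power distribution, lighting and so on)
for overhead_mwh in (500, 100):
    total = 1000 + overhead_mwh
    print(f"PUE {pue(total, 1000):.2f}, IT share {it_share(total, 1000):.0%}")
# prints:
# PUE 1.50, IT share 67%
# PUE 1.10, IT share 91%
```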

At the same time, cloud providers make much fuller use of their servers.

"Imagine you have a server that can support 100 workloads," Borrega says. "Normally what we see is that to run this basic volume of workloads, on average [data centers] use only 40% of their computing resources. But we are powering it with all the energy to support this potential functionality. So in data centers, normally IT infrastructure is used on average at 40%. When we move to cloud providers, the rate of efficiency using servers is 85%. So with the same energy, we are managing double or more than double the workloads."
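Borrega's arithmetic can be written out directly. The minimal sketch below makes the same simplifying assumption as his example: a powered server draws the same energy whatever its utilization (real servers draw somewhat less when lightly loaded, so this overstates the gap). The capacity of 100 workloads and the 40% and 85% utilization rates come from the quote; the function name is hypothetical.

```python
def workloads_served(capacity: int, utilization_pct: int) -> float:
    """Workloads a server actually runs, given its capacity and average utilization."""
    return capacity * utilization_pct / 100


CAPACITY = 100  # workloads one server could support, per Borrega's example

on_prem = workloads_served(CAPACITY, 40)  # typical corporate data center
cloud = workloads_served(CAPACITY, 85)    # typical cloud provider

# Same server, same (assumed constant) energy draw, so the ratio of workloads
# served is also the ratio of workloads per unit of energy.
print(f"{cloud / on_prem:.3f}x the workloads for the same energy")
# prints: 2.125x the workloads for the same energy
```

The result, a little over twice the workloads, matches his "double or more than double."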

Cloud providers are also running workloads more efficiently by designing new technologies. AWS, Google and others are building their own custom chips and hardware to give customers the most computing power while using the least possible energy.

Courtesy: https://www.zdnet.com/
